This article shows how I govern my Logic App alerts. A real-world video about alerts by Michael Stephenson prompted me to write about this.

Our team has a mantra: “we will not lose an order”. As a result, we use alerts to monitor all our workflows. Our goal is to make sure that all our orders are submitted before their cutoff times, so we investigate any failure immediately and then resubmit it. Alerts must also be timely and sent to an engineer in the correct time zone, because our business runs 24 hours a day, 365 days a year.

Logic App Alerts Governance

All alerts for Integration services are sent to:

- a Slack channel

- an email group

- a mobile number, as an automated call.

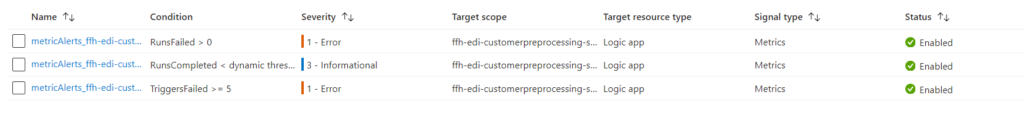

To begin with, two or three types of alerts are configured on each Logic App. An example is shown below.

- Alerts set up on every Logic App

Firstly, alert whenever a run fails: if any run fails, an alert is generated.

The alert rule is evaluated every 15 minutes over a 15-minute window.

Any Logic App run failure with “customerstream” in the name must be investigated immediately because this could be a lost order.
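The alert rules in this article send their notifications to `variables('actionGroupName')`. One way the “customerstream” rule of thumb might be encoded in the template itself is to choose the action group from the Logic App name. This is a minimal sketch under assumptions rather than a copy of a deployed template: `isOrderFlow` and `alertActionGroupName` are hypothetical variable names, added to the same `variables` section that already defines `LogicAppName`, `actionGroupSev1Name` and `actionGroupName`.

```json
{
  "variables": {
    "isOrderFlow": "[contains(toLower(variables('LogicAppName')), 'customerstream')]",
    "alertActionGroupName": "[if(variables('isOrderFlow'), variables('actionGroupSev1Name'), variables('actionGroupName'))]"
  }
}
```

An alert rule could then set `actionGroupId` to `[resourceId('microsoft.insights/actionGroups', variables('alertActionGroupName'))]`. The run-failure alert rule itself follows.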

    {
      "type": "microsoft.insights/metricAlerts",
      "apiVersion": "2018-03-01",
      "name": "[concat('metricAlerts_', variables('LogicAppName'), '-Failure')]",
      "location": "global",
      "dependsOn": [
        "[resourceId('Microsoft.Logic/workflows', variables('LogicAppName'))]"
      ],
      "properties": {
        "description": "[concat(parameters('LogicAppLocation'), variables('LogicAppName'), ' Logic App Run Failures')]",
        "severity": 1,
        "enabled": true,
        "scopes": [
          "[resourceId('Microsoft.Logic/workflows', variables('LogicAppName'))]"
        ],
        "evaluationFrequency": "PT15M",
        "windowSize": "PT15M",
        "criteria": {
          "odata.type": "Microsoft.Azure.Monitor.SingleResourceMultipleMetricCriteria",
          "allOf": [
            {
              "name": "1st criterion",
              "metricName": "RunsFailed",
              "dimensions": [],
              "operator": "GreaterThan",
              "threshold": 0,
              "timeAggregation": "Total"
            }
          ]
        },
        "actions": [
          {
            "actionGroupId": "[resourceId('microsoft.insights/actionGroups', variables('actionGroupName'))]",
            "webHookProperties": {}
          }
        ]
      }
    }

- Alert when a trigger fails

Secondly, this alert should be set up on every Logic App that has a polling trigger, for example an FTP or SFTP trigger.

If a trigger fails five or more times within a 30-minute window, an alert is fired. The rule is evaluated every 15 minutes.

Any Logic App trigger failure with “customerstream” in the name must be investigated immediately because this could be a lost order.

    {
      "type": "microsoft.insights/metricAlerts",
      "apiVersion": "2018-03-01",
      "name": "[concat('metricAlerts_', variables('LogicAppName'), '-TriggerFailure')]",
      "location": "global",
      "dependsOn": [
        "[resourceId('Microsoft.Logic/workflows', variables('LogicAppName'))]"
      ],
      "properties": {
        "description": "[concat(parameters('LogicAppLocation'), variables('LogicAppName'), ' Logic App SFTP Trigger Failures')]",
        "severity": 1,
        "enabled": true,
        "scopes": [
          "[resourceId('Microsoft.Logic/workflows', variables('LogicAppName'))]"
        ],
        "evaluationFrequency": "PT15M",
        "windowSize": "PT30M",
        "criteria": {
          "odata.type": "Microsoft.Azure.Monitor.SingleResourceMultipleMetricCriteria",
          "allOf": [
            {
              "threshold": 5,
              "name": "Metric1",
              "metricNamespace": "Microsoft.Logic/workflows",
              "metricName": "TriggersFailed",
              "operator": "GreaterThanOrEqual",
              "timeAggregation": "Total",
              "criterionType": "StaticThresholdCriterion"
            }
          ]
        },
        "actions": [
          {
            "actionGroupId": "[resourceId('microsoft.insights/actionGroups', variables('actionGroupName'))]",
            "webHookProperties": {}
          }
        ]
      }
    }

- Alert when no events occur

Thirdly, this should only be set up on high-volume interfaces.

The threshold is calculated by Azure Monitor's machine learning from the history of previous run counts. The rule is evaluated hourly and raises an alert only if the run count has been below the dynamic threshold for four consecutive hourly evaluations.

No Event alerts are a “canary in a coal mine”. They are not critical alerts, but they mean something unusual has happened; for example, a customer system has gone offline. Thus, they should be investigated as soon as possible.

    {
      "type": "microsoft.insights/metricAlerts",
      "apiVersion": "2018-03-01",
      "name": "[concat('metricAlerts_', variables('LogicAppName'), '-NoEvents')]",
      "location": "global",
      "dependsOn": [
        "[resourceId('Microsoft.Logic/workflows', variables('LogicAppName'))]"
      ],
      "properties": {
        "description": "[concat(parameters('LogicAppLocation'), variables('LogicAppName'), ' Dynamic alerts if the number of runs falls below a threshold based on historic counts.')]",
        "severity": 3,
        "enabled": true,
        "scopes": [
          "[resourceId('Microsoft.Logic/workflows', variables('LogicAppName'))]"
        ],
        "evaluationFrequency": "PT1H",
        "windowSize": "PT1H",
        "criteria": {
          "odata.type": "Microsoft.Azure.Monitor.MultipleResourceMultipleMetricCriteria",
          "allOf": [
            {
              "criterionType": "DynamicThresholdCriterion",
              "name": "1st criterion",
              "metricName": "RunsCompleted",
              "dimensions": [],
              "operator": "LessThan",
              "alertSensitivity": "Medium",
              "failingPeriods": {
                "numberOfEvaluationPeriods": 4,
                "minFailingPeriodsToAlert": 4
              },
              "timeAggregation": "Count"
            }
          ]
        },
        "actions": [
          {
            "actionGroupId": "[resourceId('microsoft.insights/actionGroups', variables('actionGroupName'))]",
            "webHookProperties": {}
          }
        ]
      }
    }

- Action group configuration

Most importantly, set up two action groups for:

- Severity 1 alerts that must be investigated immediately because this could be a lost order.

- Severity 2 alerts that must be investigated within one day.
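The two action group templates reference four variables and one parameter. A minimal sketch of how these might be declared is given here; the names come from the templates in this article, but every value is a hypothetical placeholder. Note that Azure limits an action group's short name to 12 characters.

```json
{
  "parameters": {
    "enableActionGroup": {
      "type": "bool",
      "defaultValue": true
    }
  },
  "variables": {
    "actionGroupSev1Name": "integration-sev1-alerts",
    "actionGroupSev1ShortName": "IntSev1",
    "actionGroupName": "integration-sev2-alerts",
    "actionGroupShortName": "IntSev2"
  }
}
```

Exposing `enableActionGroup` as a parameter means notifications could be switched off per environment, for example in a test subscription, without editing the template.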

The sev-1 action group looks like this:

    {
      "type": "microsoft.insights/actionGroups",
      "apiVersion": "2018-03-01",
      "name": "[variables('actionGroupSev1Name')]",
      "location": "Global",
      "properties": {
        "groupShortName": "[variables('actionGroupSev1ShortName')]",
        "enabled": "[parameters('enableActionGroup')]",
        "emailReceivers": [
          {
            "name": "Email edialerts_-EmailAction-",
            "emailAddress": "phonehome@bidone.co.nz"
          },
          {
            "name": "Email Slack channel_-EmailAction-",
            "emailAddress": "phonehome@bidone.slack.com",
            "useCommonAlertSchema": false
          }
        ],
        "smsReceivers": [],
        "webhookReceivers": [
          {
            "name": "BidOneSev1Alert",
            "serviceUri": "https://phonehome.azurewebsites.net/api/Severity1Alert",
            "useCommonAlertSchema": true,
            "useAadAuth": false
          }
        ],
        "itsmReceivers": [],
        "azureAppPushReceivers": [],
        "automationRunbookReceivers": [],
        "voiceReceivers": [],
        "logicAppReceivers": [],
        "azureFunctionReceivers": []
      }
    }

The alerts to the Slack channel and the email group are out-of-the-box Azure API connectors. The webhook is a custom Azure Function that looks up the phone number of the person on call and then rings that number, leaving an automated voice message.
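Because the webhook receiver sets `useCommonAlertSchema` to true, the function receives Azure Monitor's common alert schema payload. A trimmed, illustrative example for a fired run-failure alert is sketched here. The subscription ID, resource group, Logic App name, timestamps and metric value are placeholders rather than values from our environment, and a real payload carries additional fields.

```json
{
  "schemaId": "azureMonitorCommonAlertSchema",
  "data": {
    "essentials": {
      "alertId": "/subscriptions/<subscription ID>/providers/Microsoft.AlertsManagement/alerts/<alert ID>",
      "alertRule": "metricAlerts_customerstream-orders-in-Failure",
      "severity": "Sev1",
      "signalType": "Metric",
      "monitorCondition": "Fired",
      "monitoringService": "Platform",
      "alertTargetIDs": [
        "/subscriptions/<subscription ID>/resourcegroups/<resource group>/providers/microsoft.logic/workflows/customerstream-orders-in"
      ],
      "firedDateTime": "2021-01-01T02:15:00.0000000Z",
      "description": "Logic App Run Failures",
      "essentialsVersion": "1.0",
      "alertContextVersion": "1.0"
    },
    "alertContext": {
      "conditionType": "SingleResourceMultipleMetricCriteria",
      "condition": {
        "windowSize": "PT15M",
        "allOf": [
          {
            "metricName": "RunsFailed",
            "metricNamespace": "Microsoft.Logic/workflows",
            "operator": "GreaterThan",
            "threshold": "0",
            "timeAggregation": "Total",
            "metricValue": 1.0
          }
        ]
      }
    }
  }
}
```

A function like this one could read `data.essentials.alertRule` and `data.essentials.severity` to decide what the voice message says, and `data.essentials.monitorCondition` to skip calls for alerts that have already resolved.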

The sev-2 action group looks like this:

    {
      "type": "microsoft.insights/actionGroups",
      "apiVersion": "2018-03-01",
      "name": "[variables('actionGroupName')]",
      "location": "Global",
      "properties": {
        "groupShortName": "[variables('actionGroupShortName')]",
        "enabled": "[parameters('enableActionGroup')]",
        "emailReceivers": [
          {
            "name": "Email edialerts_-EmailAction-",
            "emailAddress": "notsourgent@bidone.co.nz"
          },
          {
            "name": "Email slack channel",
            "emailAddress": "notsourgentsazgcdhdii@bidone.slack.com"
          }
        ],
        "smsReceivers": [],
        "webhookReceivers": [],
        "itsmReceivers": [],
        "azureAppPushReceivers": [],
        "automationRunbookReceivers": [],
        "voiceReceivers": [],
        "logicAppReceivers": [],
        "azureFunctionReceivers": []
      }
    }

Conclusion

In summary, I have shown how we use three simple alerts and two action groups to monitor our Logic App farm 24 hours a day, 365 days a year.